👗 Try On Outfits with Google’s AI Doppl

👗🔍🎶 See AI Fashion, Research APIs & Music Creation Breakthroughs

Welcome to AI Entrepreneurs

AI’s Transforming Fast—Keep Up!

From Google’s Doppl style try-on to OpenAI’s Deep Research API, this issue dives into mobile-first AI, music production with Suno, and NVIDIA’s healthtech revolution. Plus: cinematic video tools and pro headshots made easy!

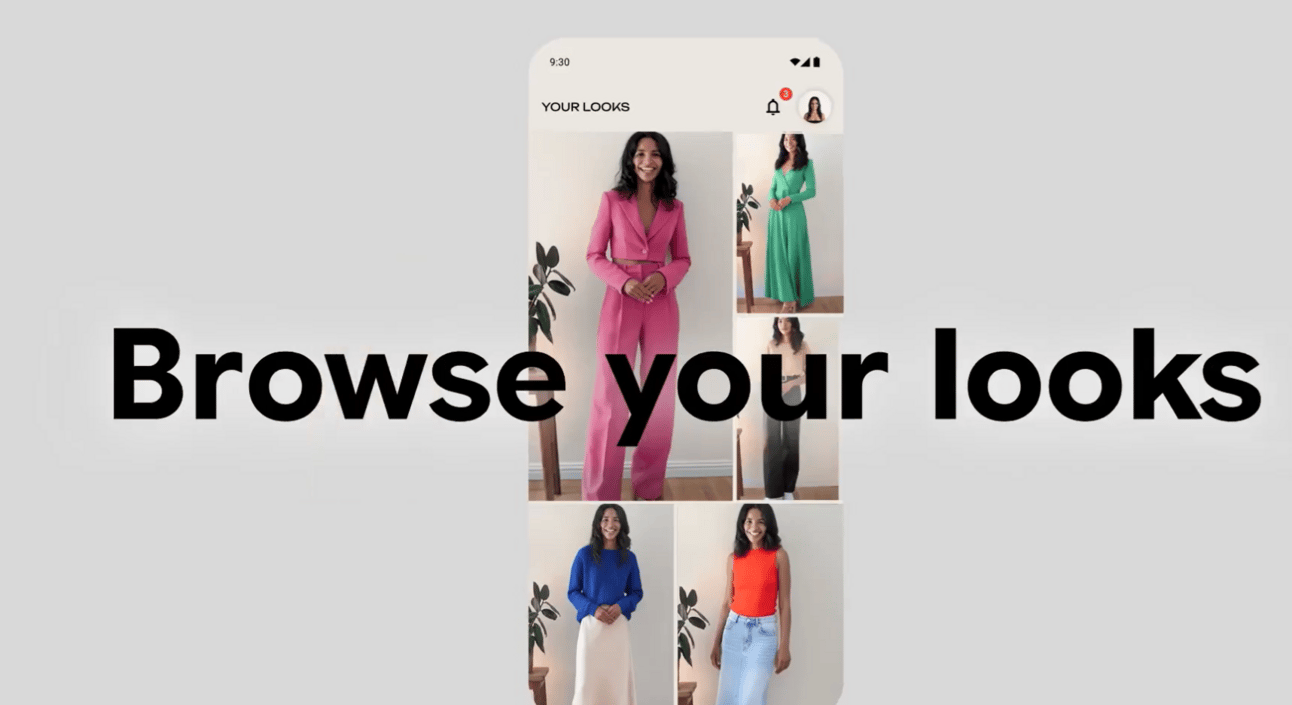

Google Drops Doppl: AI-Powered Style Try-On Lands in Labs

Google Labs has launched Doppl, a new experimental app that reimagines fashion discovery. Upload a full-body photo of yourself, and Doppl shows a lifelike digital version of you wearing any outfit, which it can also animate into a short video. The result turns style exploration into a fun, dynamic experience. Whether it’s a screenshot from social media, a snap from a thrift store, or your dream runway look, Doppl lets you visualize it instantly.

Source: Google

Key Highlights:

Upload a photo and see how any outfit might look on your animated self—no dressing room required.

Static images come alive, letting you feel the flow and fit of an outfit through dynamic motion.

Try on styles from real-world photos, online stores, or screenshots with Doppl’s flexible input support.

As a Google Labs experiment, Doppl may not always get fit and details right, and user feedback will shape its future fashion smarts.

Now on iOS & Android in the U.S.—completely free to explore.

Doppl brings fashion closer to you—literally. It’s AI meets style, with a try-on tool that makes personal expression more visual, social, and inspiring than ever.

OPENAI

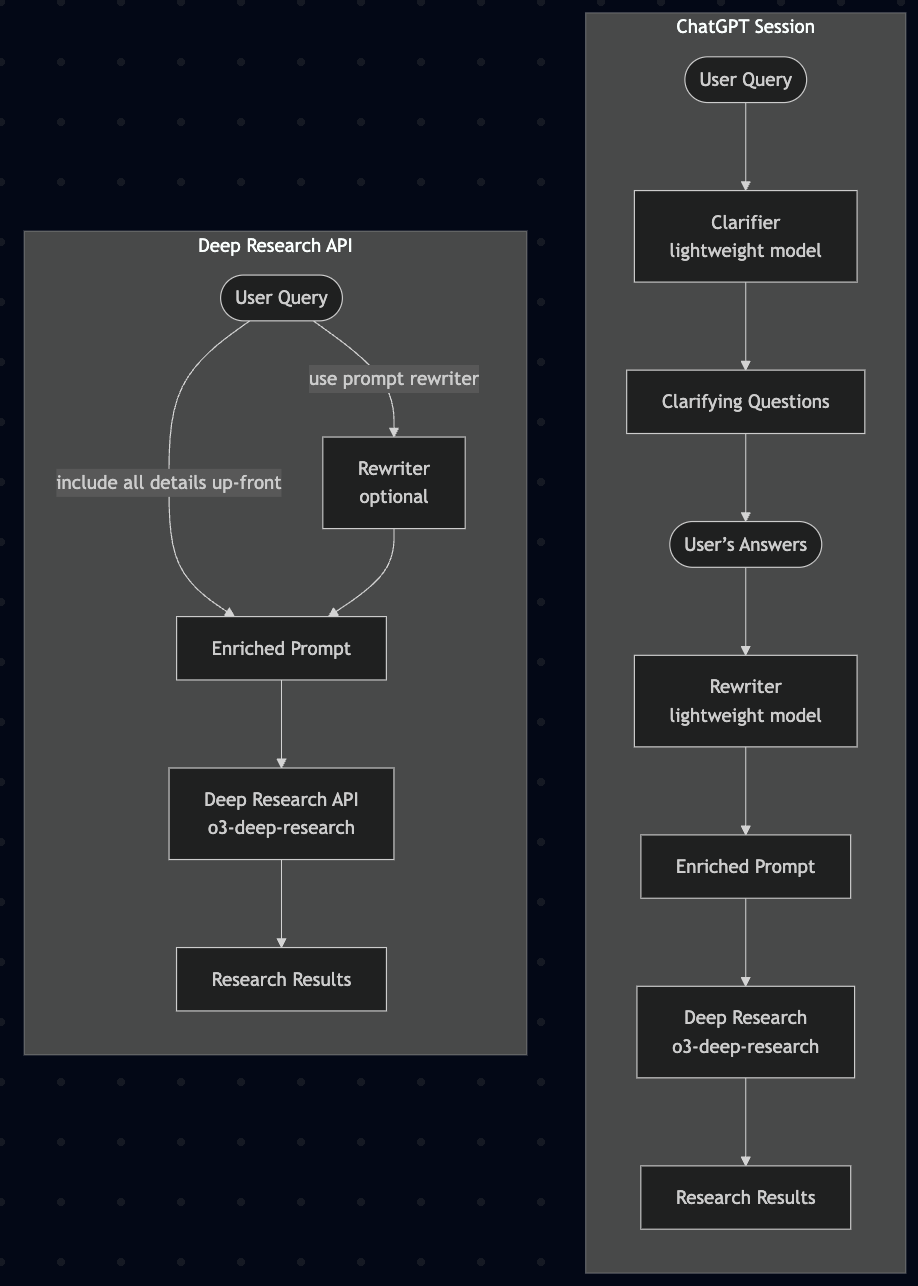

Deep Research API Cookbook: Automate Real-World Research with OpenAI

OpenAI just dropped a powerful tool for developers and analysts: the Deep Research API—designed to generate structured, citation-rich reports from a single query. Unlike ChatGPT, this API exposes the full research process—reasoning, search, code, and synthesis—making it ideal for complex planning, market analysis, and evidence-backed reporting.

Source: OpenAI

Key Highlights:

The model autonomously decomposes your query into sub-questions, searches the web, runs code (if needed), and returns a structured, sourced report.

Use o3-deep-research for full-scale synthesis or the lightweight o4-mini-deep-research for faster tasks.

Pull in your private data via the Model Context Protocol (MCP) to blend internal documents with external findings.

Easily parse annotations, citations, and intermediate steps, or generate charts and tables from the reasoning flow.

Comes with a ready-to-use Cookbook—walkthroughs to build market reports, travel planners, or healthcare research bots in minutes.

Whether you're evaluating semaglutide's impact or scoping out competitor strategies, Deep Research brings powerful synthesis to your workflow—one query at a time.
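The annotation-parsing highlight is easier to picture with a small example. The Python sketch below assumes the final report arrives as plain text plus a list of url_citation annotations carrying title, url, start_index, and end_index fields, which is how we understand OpenAI's Responses API to shape citations; check the Cookbook for the exact structure. The helper names and the sample report are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    """A url_citation annotation; field names are assumed from OpenAI's Responses API."""
    title: str
    url: str
    start_index: int
    end_index: int


def extract_citations(report_text: str, annotations: list[dict]) -> list[tuple[str, Citation]]:
    """Pair each cited span of the report with the source that backs it."""
    results = []
    for ann in annotations:
        if ann.get("type") != "url_citation":
            continue  # skip annotation kinds this sketch doesn't handle
        cite = Citation(ann["title"], ann["url"], ann["start_index"], ann["end_index"])
        # start_index/end_index are treated as a Python-style slice of the report text
        results.append((report_text[cite.start_index:cite.end_index], cite))
    return results


def bibliography(citations: list[tuple[str, Citation]]) -> list[str]:
    """Number each unique source URL in order of first appearance."""
    numbers: dict[str, int] = {}
    lines = []
    for _, cite in citations:
        if cite.url not in numbers:
            numbers[cite.url] = len(numbers) + 1
            lines.append(f"[{numbers[cite.url]}] {cite.title} ({cite.url})")
    return lines


if __name__ == "__main__":
    # Hypothetical stand-ins for the final message text and its annotations.
    # With the official SDK these would come from the last output item of the
    # response (assumption: response.output[-1].content[0].text / .annotations).
    report = "Claim A is supported. Claim B is supported."
    annotations = [
        {"type": "url_citation", "title": "Source A", "url": "https://example.com/a", "start_index": 0, "end_index": 21},
        {"type": "url_citation", "title": "Source B", "url": "https://example.com/b", "start_index": 22, "end_index": 43},
        {"type": "url_citation", "title": "Source A", "url": "https://example.com/a", "start_index": 22, "end_index": 43},
    ]
    cites = extract_citations(report, annotations)
    for span, cite in cites:
        print(f"{span!r} <- {cite.url}")
    print("\n".join(bibliography(cites)))
```

Keeping the span-to-source mapping separate from the numbered source list makes it straightforward to render footnotes or export a source table later.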

FROM SUPERHUMAN AI

Find out why 1M+ professionals read Superhuman AI daily.

In 2 years you will be working for AI

Or an AI will be working for you

Here's how you can future-proof yourself:

Join the Superhuman AI newsletter – read by 1M+ people at top companies

Master AI tools, tutorials, and news in just 3 minutes a day

Become 10X more productive using AI

Join 1,000,000+ pros at companies like Google, Meta, and Amazon that are using AI to get ahead.

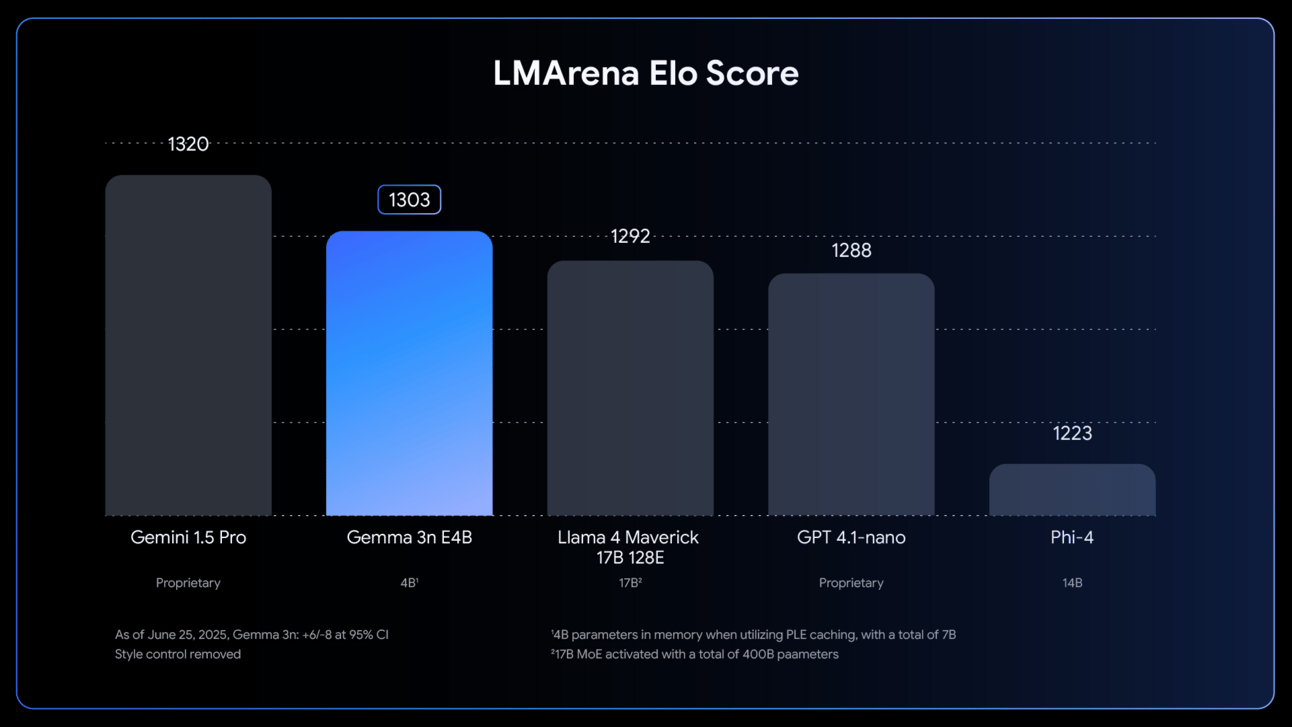

Gemma 3n Powers Up: Google’s Mobile-First AI Model Goes Multimodal

Google is redefining edge AI with the launch of Gemma 3n, a mobile-first model that brings massive multimodal muscle (text, image, audio, and video) to devices with as little as 2GB of memory. With the Gemmaverse passing 160M+ downloads, the new release is built for real-world developer workflows, with support from Hugging Face, llama.cpp, Google AI Edge, and more.

Source: Google

Gemma 3n brings on-device multimodal support for text, images, audio, and video. It comes in two sizes, E2B and E4B (effective 2B and 4B parameters), which can run in as little as 2GB and 3GB of memory respectively. Both are built on the MatFormer architecture, which nests a smaller model inside a larger one, and Mix-n-Match lets developers extract custom-sized models in between. Per-Layer Embeddings further improve memory efficiency, and the new MobileNet-V5 vision encoder handles inputs up to 768×768 resolution. Supporting text in 140 languages, Gemma 3n is, in Google's words, its most advanced edge AI model yet.
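MatFormer's nesting and Mix-n-Match are easier to grasp in miniature. The toy Python sketch below is not Gemma 3n's code: it uses plain lists and random weights to show the core idea that the first k hidden units of each feed-forward layer form a valid smaller layer, so one set of weights can serve a "large" model, a nested "small" model, and custom sizes in between by picking a width per layer. Google also describes skipping layers as part of Mix-n-Match, which this sketch leaves out; all class and function names here are hypothetical.

```python
import random


def relu(x: float) -> float:
    """Rectified linear unit applied to a single value."""
    return x if x > 0.0 else 0.0


class NestedFFN:
    """Toy feed-forward block whose first `width` hidden units form a smaller FFN."""

    def __init__(self, d_model: int, max_hidden: int, rng: random.Random) -> None:
        self.d_model = d_model
        self.max_hidden = max_hidden
        # w_in[j] projects the input onto hidden unit j (max_hidden x d_model).
        self.w_in = [[rng.uniform(-1.0, 1.0) for _ in range(d_model)] for _ in range(max_hidden)]
        # w_out[i][j] maps hidden unit j back to output dimension i (d_model x max_hidden).
        self.w_out = [[rng.uniform(-1.0, 1.0) for _ in range(max_hidden)] for _ in range(d_model)]

    def forward(self, x: list[float], width: int) -> list[float]:
        if not 1 <= width <= self.max_hidden:
            raise ValueError(f"width must be between 1 and {self.max_hidden}")
        # Only the first `width` hidden units are computed: this prefix slice is the nested sub-model.
        hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in self.w_in[:width]]
        return [sum(w * h for w, h in zip(row[:width], hidden)) for row in self.w_out]


def run_mix_n_match(layers: list[NestedFFN], x: list[float], widths: list[int]) -> list[float]:
    """Run a stack of layers, choosing a (possibly different) width for each one."""
    if len(layers) != len(widths):
        raise ValueError("need exactly one width per layer")
    for layer, width in zip(layers, widths):
        delta = layer.forward(x, width)
        x = [xi + di for xi, di in zip(x, delta)]  # residual connection
    return x


def ffn_param_count(d_model: int, widths: list[int]) -> int:
    """FFN weights in use: each layer needs d_model*width (in) plus width*d_model (out)."""
    return sum(2 * d_model * w for w in widths)


if __name__ == "__main__":
    rng = random.Random(0)
    layers = [NestedFFN(d_model=4, max_hidden=8, rng=rng) for _ in range(3)]
    x = [0.5, -0.2, 0.1, 0.9]
    full = run_mix_n_match(layers, x, [8, 8, 8])   # the "large" model
    small = run_mix_n_match(layers, x, [4, 4, 4])  # the nested "small" model
    mixed = run_mix_n_match(layers, x, [4, 8, 6])  # a custom size in between
    print(ffn_param_count(4, [8, 8, 8]), ffn_param_count(4, [4, 4, 4]), ffn_param_count(4, [4, 8, 6]))
    # → 192 96 144
```

Because every width reuses a prefix of the same weights, no extra parameters are stored for the smaller or in-between variants; only the amount of computation changes.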

SUNO

Suno x WavTool: AI Music Production Levels Up

Suno is doubling down on pro-grade music creation with its acquisition of WavTool—an advanced browser-based DAW combining real-time AI editing with studio-class tools. The move brings WavTool’s elite team and core tech under Suno’s roof, just weeks after Suno’s new song editor rollout.

Source: SunoMusic

With built-in AI tools like stem separation, auto MIDI, and a real-time music chatbot, WavTool makes studio-quality production accessible from anywhere. With WavTool now part of Suno, the deal brings WavTool’s engineering leadership into the fold to accelerate intuitive, AI-first music tools for artists at every level.

WITH HUBSPOT

Ready to save precious time and let AI do the heavy lifting?

Save time and simplify your unique workflow with HubSpot’s highly anticipated AI Playbook—your guide to smarter processes and effortless productivity.

AI BYTES

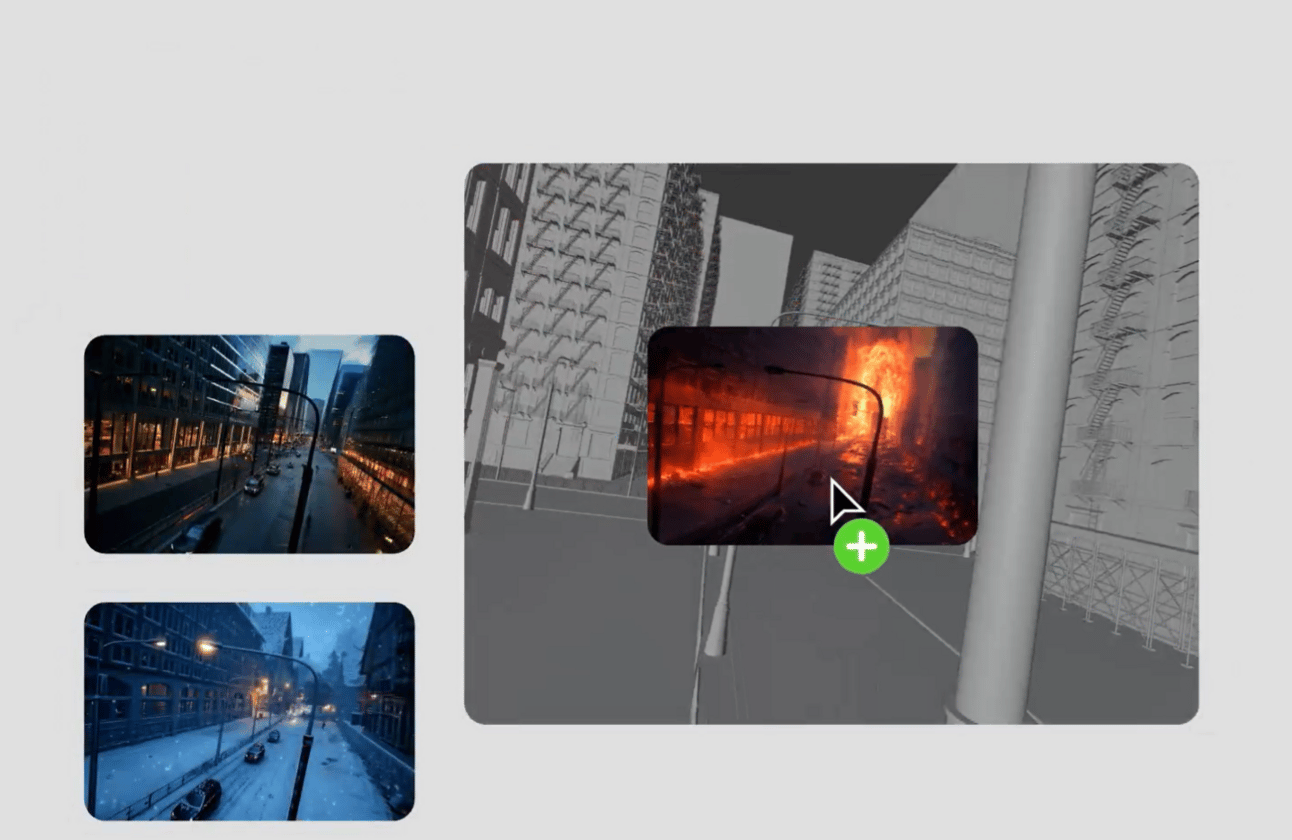

Luma Launches AI-Powered Modify Video API for Cinematic Editing

Source: LumaLabs

Luma AI just dropped its Modify Video API, giving developers powerful new tools to transform user-uploaded clips into cinematic, stylized, or completely reimagined visuals using custom workflows. Now available via SDKs in Python and JavaScript, the API supports dynamic video modification at scale—perfect for powering creative AI apps, visual storytelling, or real-time content remixing.

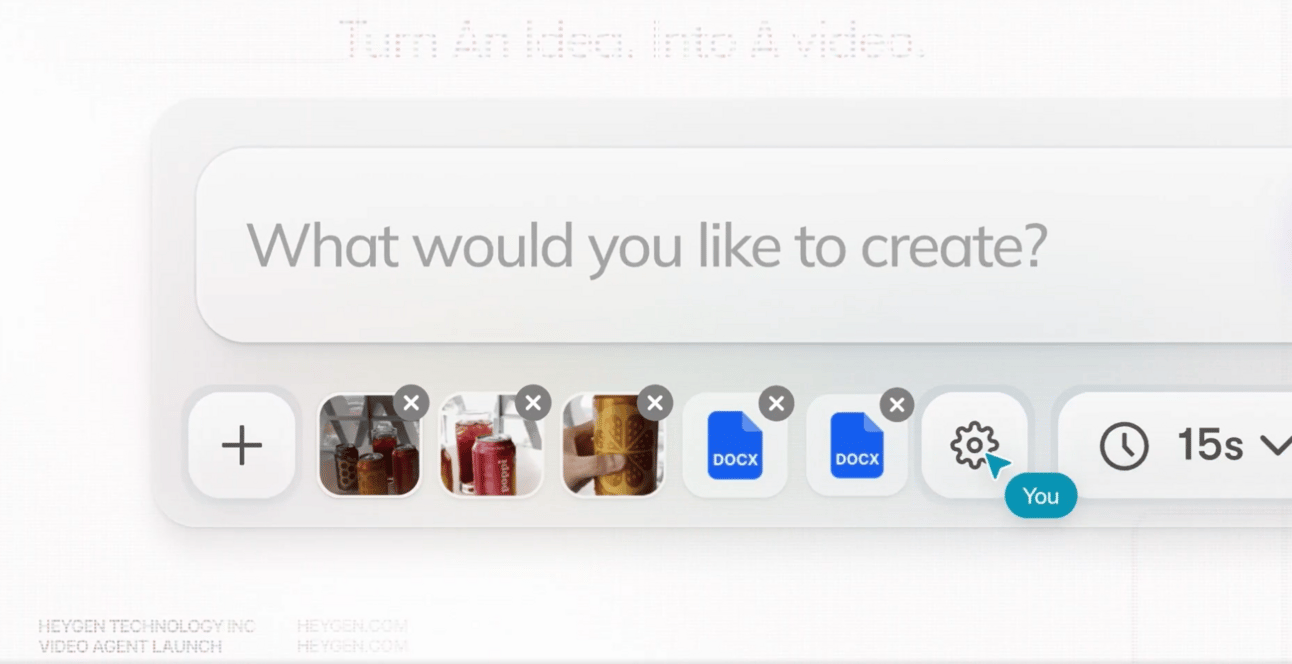

HeyGen Debuts AI Video Agent

Source: HeyGen

HeyGen has launched Video Agent, an AI-powered creative OS that turns a prompt as short as one sentence, or your own footage, into a fully edited video, complete with script, shots, actors, and motion. Designed for ads, TikToks, product demos, and more, it acts like a virtual creative team in your pocket.

WITH INSTAHEADSHOTS AI

Don’t settle for an average profile photo

Look like someone worth hiring. Upload a few selfies and let our InstaHeadshots AI generate stunning, professional headshots: fast, easy, and affordable.

AI HEALTH

Inside Issue #54: Smarter Drug Trials with NVIDIA AI

NVIDIA’s AI agents are now streamlining clinical research, cutting timelines from weeks to days. Built in partnership with IQVIA, these orchestrator agents automate trial tasks and supercharge pharma productivity, marking a bold shift toward getting new treatments to patients faster.

Also in This Issue

Explore how AI is enhancing mental health for older adults, protecting UAE hospitals with real-time cybersecurity, and saving 15K+ hours a month through hospital automation.

Issue #54 is packed with real-world breakthroughs—don’t miss it.

Discover how AI is changing health forever. Subscribe FREE & stay ahead in health tech.

AI CREATIVITY

Master Keyframes in Luma AI Ray2

In this tutorial, you’ll learn how to use Luma AI’s Keyframes feature to create precise, cinematic AI videos by setting starting and ending frames.

Step-by-step:

Access Dream Machine

Set Your Keyframes

Add a Text Prompt (Optional)

Generate and Review Your Video

Example:

Starting Frame: A park covered in winter snow.

Ending Frame: The same park in autumn with golden leaves falling.
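For developers who want the same start-and-end-frame control outside the Dream Machine app, here is a minimal Python sketch that builds a JSON request body for a keyframed generation. The field names (model, keyframes, frame0, frame1, and an image entry with a url) reflect our reading of Luma's public Dream Machine API docs and should be verified before use; the image URLs are hypothetical placeholders for the winter and autumn park frames above. The prompt is optional here to mirror the tutorial, though the API may expect one.

```python
import json
from typing import Optional


def keyframe_request(start_image_url: str, end_image_url: str,
                     prompt: Optional[str] = None, model: str = "ray-2") -> dict:
    """Build a request body that pins the first and last frames of a clip.

    Assumption: the "keyframes"/"frame0"/"frame1" layout follows Luma's
    Dream Machine API as we understand it; check the current docs.
    """
    body: dict = {
        "model": model,
        "keyframes": {
            "frame0": {"type": "image", "url": start_image_url},  # starting frame
            "frame1": {"type": "image", "url": end_image_url},    # ending frame
        },
    }
    if prompt:  # optional text guidance, as in the tutorial's third step
        body["prompt"] = prompt
    return body


if __name__ == "__main__":
    body = keyframe_request(
        "https://example.com/park-winter.jpg",  # hypothetical: park covered in winter snow
        "https://example.com/park-autumn.jpg",  # hypothetical: same park in autumn
        prompt="The seasons change in a quiet park as golden leaves begin to fall",
    )
    print(json.dumps(body, indent=2))
```

Building the body as a plain dictionary keeps the sketch independent of any particular SDK version, so the same structure can be sent with whichever HTTP client or official SDK you already use.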
